LumaLabs.ai Dream Machine is an AI model that quickly makes high-quality, realistic videos from text and images. It is a highly scalable and efficient transformer model trained directly on videos, which makes it capable of generating physically accurate, consistent, and eventful shots.

To test this, I used ChatGPT to create a prompt that would blend the worlds of Star Wars and Star Trek into a prehistoric environment. This is the full text of the prompt I used:

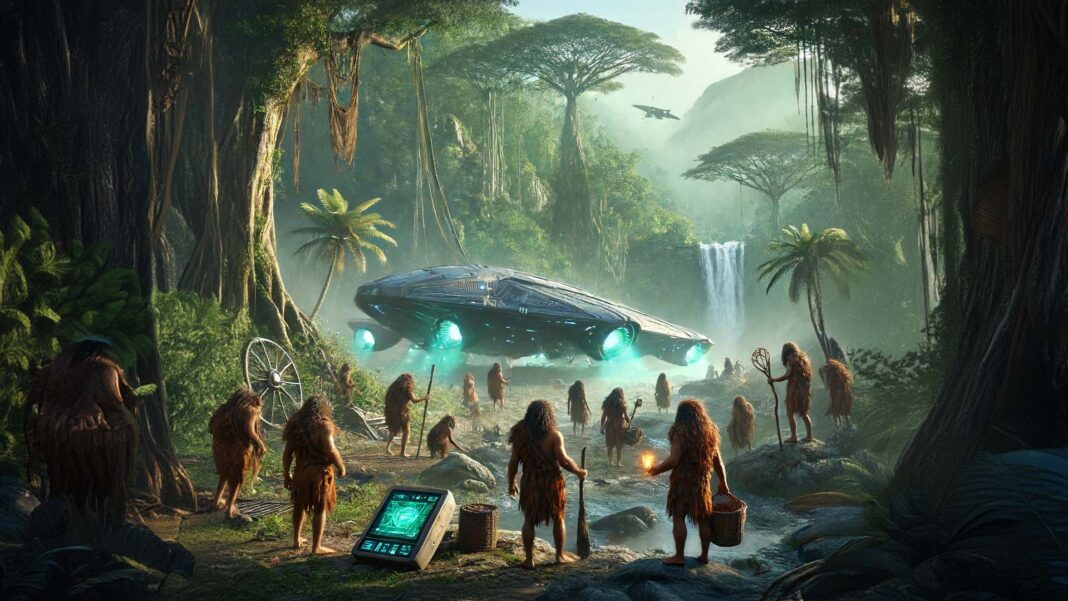

Imagine a prehistoric world where advanced cave people discover futuristic alien technology. The scene opens in a dense jungle, with towering trees and misty air. A group of cave people, dressed in primitive attire made from animal hides, stumble upon a glowing alien spacecraft partially buried in the ground.

As they cautiously approach, the ship’s doors open with a hiss, revealing sleek, metallic interiors with blinking lights and holographic displays. Inside, they find advanced tools and weapons resembling a mix of Star Wars lightsabers and Star Trek phasers. The cave people experiment with these devices, learning to harness their power.

Suddenly, the scene shifts to a dramatic battle against prehistoric creatures. The cave people, now wielding the alien technology, defend their territory from giant, menacing dinosaurs. They use lightsaber-like weapons to fend off the creatures and employ tactical maneuvers inspired by Star Trek’s strategic approaches.

The video culminates with the cave people establishing a new society, blending their primitive ways with the advanced technology, symbolizing the dawn of a new era of prehistoric sci-fi civilization.

And this was the result:

So the video? Terrible, but maybe not the fault of the AI. I decided the prompt was too long and perhaps included too much information, so my second try was simpler and covered something I already have reference videos of on this site. Could AI do a better flight over Dix Park than I could? Here is my prompt:

Drone flight over Dix Park highlighting the Big Field and Gipson Play Plaza.

And this was the result:

This was a much better, almost believable rendering. The basic elements are there, and while it isn’t perfect, it might fool someone who doesn’t visit the park regularly. Perfect? No. Terrible? Not that either.

One last prompt, for something I would honestly love to do: can LumaLabs make my idea real enough?

Can you show a first-person video of a high-speed drone flight through Raleigh, NC that is carefully choreographed through the St. Patrick’s Day Parade with flawless precision?

And the output:

I am not sure what happened here. In addition to the area looking nothing like Raleigh, and maybe not even like a parade, the model decided to add gibberish text to the screen. I’m not sure what it is for or why, but it is there.

Final takeaway? This is pretty cool for a 5-second video creator, and while it isn’t great, it is a great start. More text didn’t exactly get me more, and less text didn’t really get me more either. The results are still rough, but it did understand the base narrative of the long prompt and how to create some sense of flight in the two drone prompts. I’m still impressed. I am not 100% sure how I would use this tool today, but I am really glad it is being developed and look forward to crossing paths with it again in the near future.

P.S. The cover image was created by ChatGPT and then expanded to 1920×1080 with Adobe Photoshop Beta, using the first video prompt. I did this to see how different AI tools might interpret the same prompt.